Architecture Assessment#

The architecture assessment examines the product’s components at both the system and software levels to develop a picture of risk and risk mitigation. It answers the question: “How well designed is the architecture underlying the product?”

The architecture assessment is designed to evaluate the product’s architectural support for the RABET-V security control families. This evaluation produces an architecture maturity score for each security control family and identifies the components that provide each security service. This score does not measure how well the product executes the security service (i.e., its implementation score), just how mature the architecture is that supports each security service. (Note: At this time, the architecture assessment only reviews security services; when there are non-security control families implemented in the RABET-V process, this will be revisited.)

The architecture maturity scores and component mappings are used to help assess the risk that changes to the product will negatively impact the security services. These are used in the test plan determination to identify how to test the product changes. Higher architecture maturity scores, in conjunction with organizational maturity scores, may indicate that less testing is needed to validate that changes have not created increased risk in the product.

The architecture assessment identifies the product components at the system and software levels that expose functionality, and the security services that protect those functions. Security service components are classified as composite or transparent. A composite security service component requires some level of implementation in the software (e.g., encryption or input validation). A transparent security service component requires no integration with the software; examples include firewall, transparent disk encryption, and physical security.

This activity also addresses the system and software architecture viewpoints. The system level diagram(s) identify the larger components of the environment used to host and manage the software application(s). The software level diagrams identify the components a layer deeper into the software application(s).

The architecture assessment will result in a score for each of the control families and an overall score. To achieve a verified status in the architecture assessment, an architecture would need to score high enough to meet or exceed the baseline score defined for each security control family and the overall score.

Architecture Assessment Methodology#

For more information about what is expected for the architecture assessment, see the provider submission activity and the RABET-V security requirements.

Inputs#

The RTP processes their source code through the designated software bill of materials (SBOM) and software architecture analysis tools (Mend and Lattix). For more information about what is expected for the architecture diagrams and description, see the Provider Submission activity.

The security control families provide guidance as to the needed controls to help protect the product and related data

The architecture maturity rubric was created to help score the product architecture in the categories of reliability, manageability and consistency, maintainability (comprised of modularity and isolation), and depth of control coverage (i.e., defense-in-depth)

The staff of the RTP will be interviewed during several tasks, including the system and software architecture assessment

Inputs provided by the output of prior tasks are described in the narrative below

Outputs#

Architecture maturity workbook containing an executive summary tab, system level diagram(s), and architecture scoring

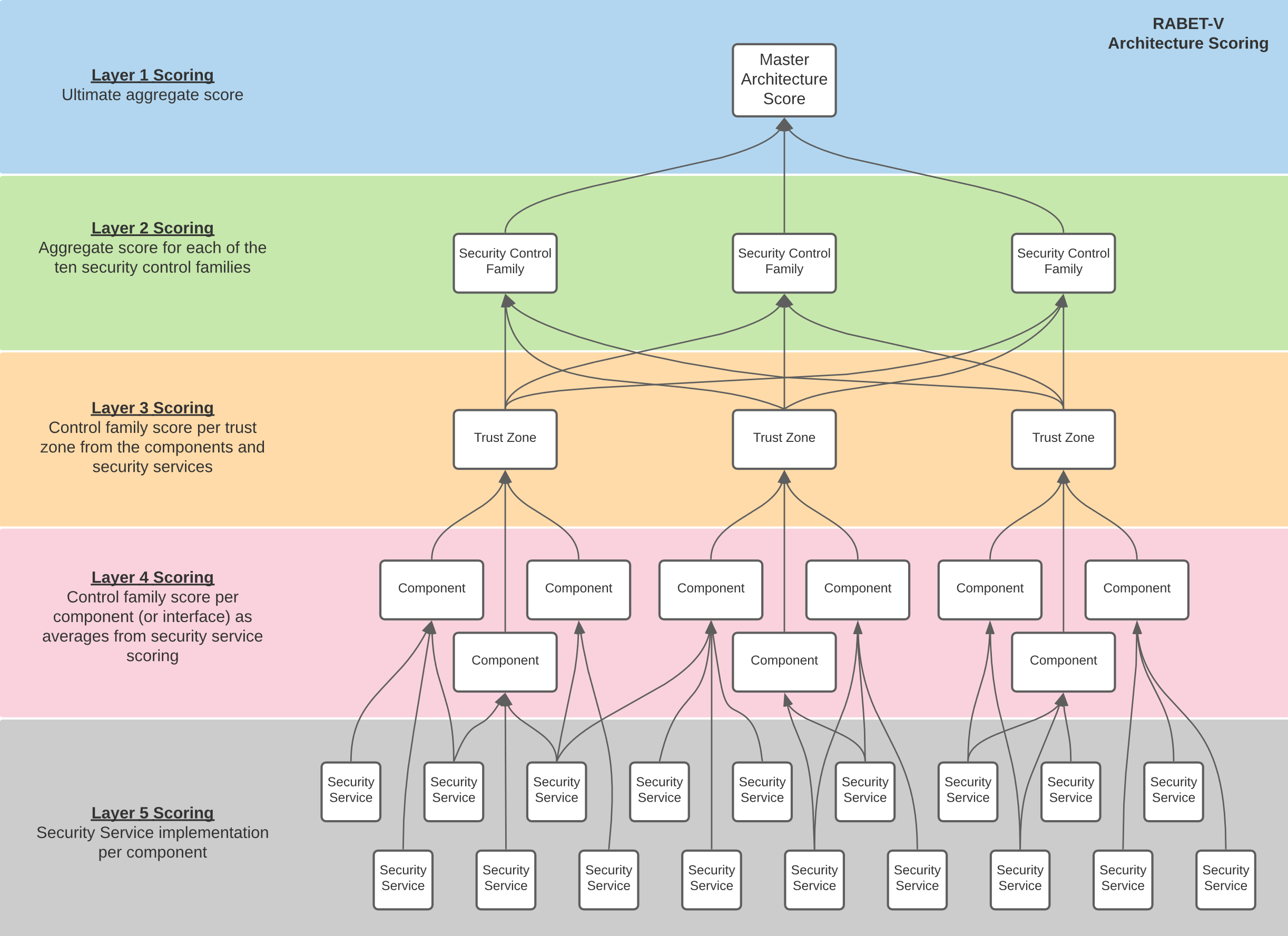

Architecture maturity scores based on the maturity scoring rubric: for each security control family, the architecture is assigned scores that correspond to how well it supports the mitigations within that family. These scores are calculated at five layers, starting at the most detailed level (security service implementation per component or interface) and rolling up to a master architecture score.

Software architecture report identifying the components of the system and how the security services are used in relation to those components

Interstitial outputs between tasks are described in the narrative below

Workflow#

Tasks#

Perform System Architecture Assessment

The system-level assessment takes the provider-submitted architecture documentation as input, along with interview sessions with individuals who possess knowledge of the system and software architecture. The security control families used by the application are enumerated.

Outputs:

Security Service Listing

System Architecture Diagrams

System Level Scores

Perform Software Composition Analysis

Software composition analysis looks at the third-party libraries used by the product, including licenses, maintainers, and known vulnerabilities. RTPs submit a Software Bill of Materials (SBOM) in an approved format for analysis against vulnerability databases. This task produces reliability scores for some security services.

Outputs:

Reliability Scores

SBOM

Perform Software Architecture Analysis

Accredited assessor organizations analyze the software architecture using architectural analysis tools and interviews. RTPs run Lattix against each codebase and submit the results to RABET-V for further analysis. Interviews are conducted to confirm the existence of security services and to validate the analysis performed by the accredited assessor organizations.

Inputs:

Lattix (LDM) files

SBOM

Outputs:

Software Level Scores

Build Architecture Model

Assessors create an architecture model containing the components, trust boundaries, and interfaces identified during the system and software architecture analysis. Security service scores are assigned at each point of use (e.g., component, trust boundary).

Inputs:

Reliability Scores

Security Services

SBOM

Software Level Scores

System Architecture

System Level Scores

Outputs:

Architecture Review Report

Point of Use Scores

Construct Architecture Review Workbook

Import scoring from the scoring instrument and run calculations. Thoroughly review the updated scores, looking for missing or incorrectly scored controls. Address any discrepancies by checking notes or consulting with the RTP.

Inputs:

Depth Score

Point of Use Score

Outputs:

Consolidated Architecture Scores

Submit and Review Assessment

Upload all documents to the RABET-V Portal and circulate for feedback with the RABET-V Administrator and RTP.

Inputs:

Architecture Review Workbook

Analyze Third-Party Component Details

The third-party component details describe the RTP’s approach to managing supply chain risk. This includes whether the organization has selected third-party software components with a history of known vulnerabilities, and how the organization maintains traceability and assurance of third-party and open-source software throughout the lifetime of the software.

When considering parts of the overall solution that are not developed internally, each unique version of the following will be considered an individual component of the system:

Operating System

Framework

Third-party API

Embedded Third-party Library

Hosting Software/Service (e.g., IIS, Docker, Elastic Beanstalk, Azure App Service)

Database (stored functions and procedures will be treated as a part of the software application)

File Storage System/Service

Network Appliance (virtual or physical)

External Device Driver/Firmware

A replacement or major version change to one of these components will be treated as a change type subject to iteration testing per the test plan determination.
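The rule above can be sketched as a comparison of two component inventories. This is only an illustration, not part of the RABET-V tooling: the `Component` record, the `flag_changes` helper, the dotted version format, and the simplification of one component per kind are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    kind: str     # one of the categories listed above, e.g. "Database"
    name: str     # product name (hypothetical example data)
    version: str  # assumed to be dotted, e.g. "14.2"


def major(version: str) -> str:
    """Return the leading segment of a dotted version string."""
    return version.split(".")[0]


def flag_changes(old: list[Component], new: list[Component]) -> list[str]:
    """List replacements and major version changes between two inventories.

    Assumes at most one component per kind in each inventory.
    """
    old_by_kind = {c.kind: c for c in old}
    findings = []
    for comp in new:
        prev = old_by_kind.get(comp.kind)
        if prev is None:
            continue  # newly added kinds are out of scope for this sketch
        if prev.name != comp.name:
            findings.append(f"{comp.kind}: replaced {prev.name} with {comp.name}")
        elif major(prev.version) != major(comp.version):
            findings.append(
                f"{comp.kind}: {comp.name} major version "
                f"{major(prev.version)} -> {major(comp.version)}"
            )
    return findings
```

Minor version bumps (for example 4.1 to 4.2) produce no finding in this sketch, since the text only names replacements and major version changes.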

The RTP should detail initial and ongoing vetting procedures for third-party providers and components (if not covered in the process descriptions), including open-source software and libraries. Vetting should include fit for the provider as well as security and reliability. Management of third parties includes the approach to policies, service level agreements (SLAs), reputation, maintenance, and past performance of third-party software and services.

Third-party libraries will be processed through automated SBOM tools. RTPs are required to facilitate the ingestion of software libraries through designated tooling. RTPs should ensure these tools are permissible within their environments and should contact the administrator with any questions about the tools.

Architecture Maturity Rubric#

The architecture assessment results in maturity scores that indicate how well the product’s architecture is built to support each security service. These scores do not indicate the quality of the security services used, but how well the architecture is designed to resist attacks, protect data and functionality, and accommodate changes without impacting the security services used.

The architecture maturity rubric provides a maturity score for each of the ten security control families. The scores range from 0 to 3, where 3 is the best.

The architecture maturity rubric scores across the four measures below.

Reliability#

The component (or the substantial logic thereof) is provided by a reputable party and actively maintained.

0 – Unvetted component, written in-house with minimal documentation or third-party component that is uncommon and/or not actively supported

1 – Vetted component used, but may not be a current version or actively supported

2 – Mature, vetted component used with multiple active contributors; configured by secure best practices/guidelines

3 – Mature, vetted component used that is actively supported or approved by a professional community/organization, and is enforced by technical or procedural controls

Manageability and Consistency#

The component meets four criteria: it is centrally managed by the provider, its configurations are tuned to best practices, its configurations are enforced, and its configuration is under full change management with attribution.

0 – Component does not exhibit any of the criteria

1 – Component exhibits one or two criteria

2 – Component exhibits three of the criteria

3 – Component exhibits all four criteria
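The mapping from criteria met to score can be written down directly. The sketch below is illustrative only; the criterion identifiers and the `manageability_score` function are hypothetical names chosen for the example, not terms from the rubric.

```python
# Hypothetical identifiers for the four criteria described above.
CRITERIA = frozenset({
    "centrally_managed",
    "tuned_to_best_practices",
    "configuration_enforced",
    "change_managed_with_attribution",
})


def manageability_score(met: set[str]) -> int:
    """Map the set of criteria a component exhibits to a 0-3 score."""
    unknown = met - CRITERIA
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    count = len(met)
    if count == 0:
        return 0
    if count <= 2:
        return 1  # one or two criteria
    if count == 3:
        return 2
    return 3      # all four criteria
```

For example, a component that is centrally managed and has enforced configurations, but meets neither of the other two criteria, scores 1.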

Maintainability: Modularity#

The component is segregated from other components at the system level and dedicated to providing its security service.

0 – no segregation, not separated into own library

1 – separated into a library (inclusive of namespace segregation)

2 – separated process, same execution environment as a protected component

3 – separate unit of deployment (cloud service, or physically)

Maintainability: Isolation (Composite Service Only)#

Access to the security service component is mediated through a central software component.

Depth#

A component is segregated from other components and reusable inside other components. Components are complementary to provide a consistent, layered defense for the overall system. There should not be multiple versions or variations of the security service component unless absolutely necessary.

0 – Component coverage is lacking and/or haphazardly applied

1 – Component coverage has gaps, is managed inconsistently, and is not segregated

2 – Component coverage has minimal gaps, some layering and segregation, and part of a repeatable process

3 – Components are intentional, built into layers, part of a repeatable/auditable process, and tested regularly

Rubric Configuration#

Each use of a security service is scored separately (except Depth). For example, if Log4Net and EnterpriseLibrary.Logging were used as logging and alerting services, each would be scored separately across the measures below.

Each use is scored on three measures: reliability, manageability and consistency, and maintainability. Maintainability is broken down into modularity (for system-level services) and isolation (for software-only or composite services). Depth, the fourth measure, is scored once per security service type, at the aggregate control family level only.

| Type | Reliability | Consistency | Modularity | Isolation | Example |
|---|---|---|---|---|---|
| Transparent | x | x | x | | Firewall |
| Composite | x | x | Service Only | Software Only | Azure AD integrated with App |

Rubric scoring is applied to each security service at its point of use. If the same security service is used by different components, it will receive separate scores. Scores are rolled up by trust boundary, then by security control family; finally an aggregate score is derived.
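The roll-up order described above (point of use, then trust boundary, then security control family, then aggregate) can be sketched as follows. The text does not state how scores are combined at each step, so the arithmetic mean used here is purely an assumption, as are the `roll_up` function and the tuple layout of the input.

```python
from collections import defaultdict
from statistics import mean


def roll_up(points):
    """Roll point-of-use scores up to family scores and an aggregate.

    points: iterable of (family, trust_boundary, score) tuples.
    ASSUMPTION: every step combines scores with an arithmetic mean;
    the actual RABET-V calculation may differ.
    """
    # Step 1: group point-of-use scores by (family, trust boundary).
    by_boundary = defaultdict(list)
    for family, boundary, score in points:
        by_boundary[(family, boundary)].append(score)

    # Step 2: collect one score per trust boundary under each family.
    by_family = defaultdict(list)
    for (family, _boundary), scores in by_boundary.items():
        by_family[family].append(mean(scores))

    # Step 3: one score per family, then a single aggregate score.
    family_scores = {family: mean(scores) for family, scores in by_family.items()}
    aggregate = mean(list(family_scores.values()))
    return family_scores, aggregate
```

Under this assumption, a trust boundary with many points of use carries the same weight as one with a single point of use, because averaging happens per boundary before it happens per family.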

Architecture Baseline Scoring#

RABET-V uses baseline scoring in organizational, architecture, and product verification to determine whether a product is “Verified,” “Conditionally Verified,” or “Returned.” The architecture baseline is a combination of a minimum score for each of the ten security control families along with a minimum overall baseline maturity score.

The overall baseline for the architecture maturity score is 1.50.

The table below lists the baseline scores required for each of the security control families that are measured in the architecture assessment, along with the overall score to be considered for the verified status.

| Security Control Family | Baseline Score |
|---|---|
| Authentication | 1.50 |
| Authorization | 1.50 |
| Boundary Protection | 2.00 |
| Data Confidentiality and Integrity | 1.50 |
| Injection Prevention | 1.25 |
| Logging and Alerting | 1.50 |
| Secret Management | 1.50 |
| System Availability | 1.50 |
| System Integrity | 1.50 |
| User Session | 1.25 |
| Overall Score | 1.50 |
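A baseline comparison using the values in the table might look like the sketch below. The `baseline_shortfalls` helper is hypothetical, and treating a missing family as a score of 0.0 is an assumption; the mapping from shortfalls to "Conditionally Verified" or "Returned" is not defined in this section and is deliberately left out.

```python
# Baseline values copied from the table above.
FAMILY_BASELINES = {
    "Authentication": 1.50,
    "Authorization": 1.50,
    "Boundary Protection": 2.00,
    "Data Confidentiality and Integrity": 1.50,
    "Injection Prevention": 1.25,
    "Logging and Alerting": 1.50,
    "Secret Management": 1.50,
    "System Availability": 1.50,
    "System Integrity": 1.50,
    "User Session": 1.25,
}
OVERALL_BASELINE = 1.50


def baseline_shortfalls(family_scores: dict[str, float], overall: float) -> list[str]:
    """Return the names of every baseline the architecture falls below.

    ASSUMPTION: a family with no reported score is treated as 0.0.
    An empty result means all baselines are met or exceeded.
    """
    shortfalls = [
        family
        for family, baseline in FAMILY_BASELINES.items()
        if family_scores.get(family, 0.0) < baseline
    ]
    if overall < OVERALL_BASELINE:
        shortfalls.append("Overall Score")
    return shortfalls
```

A score exactly equal to its baseline passes, matching the "meet or exceed" wording used for verified status.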