Organizational Assessment

The organizational assessment measures the quality of a registered technology provider’s (RTP) product development practices to answer the question, “How good is the organization at developing technology products?”

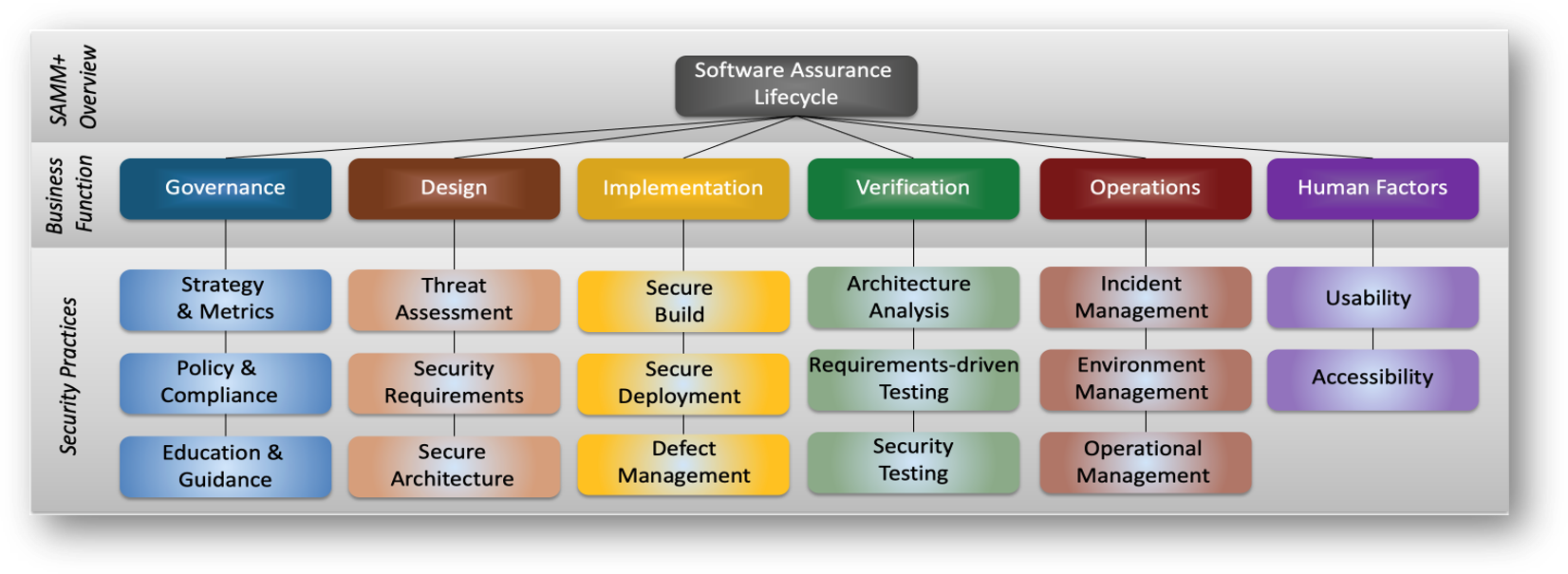

It provides organizational maturity scores for the RTP. It uses OWASP’s Software Assurance Maturity Model (SAMM) as the basis for its evaluation and extends SAMM with practices and activities for a human factors area that covers usability and accessibility. Thus, the six areas in the organizational assessment are:

Governance

Design

Implementation

Verification

Operations

Human Factors

In addition to providing the maturity scores, the organizational assessment determines which RTP-generated artifacts are reliable enough for RABET-V to use. By using reliable RTP-generated artifacts (e.g., test results), the RABET-V process avoids having to reproduce them.

The organizational maturity scores and the reliability of RTP-generated artifacts help determine the types of testing RABET-V conducts for product revisions. The organizational maturity scores are combined with the architecture maturity scores to support risk-based testing in the product verification step.

Organizational Assessment Methodology

For more information about what is expected for the organizational assessment, see the provider submission activity and the RABET-V security requirements.

Inputs

Process descriptions

Interviews with RTP

Outputs

Organizational maturity scores

List of product development artifacts usable for verification

High-level executive summary of the process, findings, organizational maturity score, and tailored recommendations

Completed organizational assessment toolbox

Workflow

Review Existing Documentation

An accredited assessor reviews existing documentation submitted by RTPs, including:

Policy and compliance documents that relate to, or help define, the organization’s efforts in acquiring, managing, designing, developing, testing, and supporting software

Process-related documents that define which processes the RTP follows for software activities

A representative sample of artifacts from completed activities governed by the above policy, compliance, and process documents

Discussion Sessions

An accredited assessor leads discussions with the different roles that support the RTP’s software development process. Each discussion lasts approximately 60 to 90 minutes. Sessions are driven by the organizational maturity rubric but are not checklist-based; instead, they focus on how processes and procedures are implemented and conducted throughout the organization.

Below are some of the common organizational roles held by individuals that would be interviewed:

Application/software security lead or equivalent party with responsibilities for defining and managing the integration of security into software

Business analyst or similar role with responsibilities related to requirements, user stories, etc.

Project manager or similar role with responsibilities for guiding teams through the processes to develop, acquire, and maintain software

Application architect or similar role with responsibilities to ensure good design and architecture for applications

Developer or similar role with responsibilities for writing code and performing some testing

Quality assurance/tester or similar role that handles the primary testing for software or applications

DevOps engineer or similar role with responsibilities related to build and deployment processes for software

Incident response/support or similar roles with responsibilities for supporting, triaging, and responding to issues in production systems

Determine Artifact Reliability

RABET-V can expedite product verification if certain software development artifacts are found to be reliable. When artifacts are found to be reliable, the RABET-V process may use them instead of reproducing similar artifacts and tests. However, RABET-V is not required to use them, and the process may include reproducing the results submitted by the RTP to validate that the artifacts are reliable.

The organizational assessment helps determine whether the following artifacts are accurate and consistently available for RABET-V iterations. If the RTP has additional software development artifacts that it believes are reliable and would help streamline the RABET-V process, it may request that those artifacts be evaluated and that the test plan be updated to account for them.

Change List

The change list is the most important software development artifact used by RABET-V when performing product verification in a revision iteration. It is critical that the list is accurate, detailed, and complete. While RTPs can submit manually generated change lists, they may take longer to process than automated change lists built from the central source code repository and reviewed by system architects and product owners.

During the organizational assessment, the method used for building change lists will be discovered and sample change lists will be reviewed for accuracy and completeness. If the change list is determined to be reliable, the RABET-V process will use the RTP’s change list and not generate its own. If the change list is not reliable, the RABET-V process will explore other ways to produce an accurate change list, which may take additional time and resources.
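Reviewing a sample change list for completeness amounts to comparing it with what the source repository says actually changed. The Python sketch below illustrates that comparison under stated assumptions: the RTP uses git and tags its releases, and the submitted change list can be reduced to a set of file paths. The function names, tag names, and that set-of-paths format are hypothetical, not part of the RABET-V process.

```python
from __future__ import annotations

import subprocess


def parse_name_status(output: str) -> set[str]:
    """Parse `git diff --name-status` output into a set of changed paths.

    Rename and copy entries (status R or C) list an old and a new path;
    both are kept. Paths with unusual characters are quoted by git unless
    -z is used; this sketch does not handle that case.
    """
    paths: set[str] = set()
    for line in output.splitlines():
        if not line.strip():
            continue
        _status, *files = line.split("\t")
        paths.update(files)
    return paths


def repo_changes(old_ref: str, new_ref: str, repo: str = ".") -> set[str]:
    """Ask git which paths changed between two refs (e.g. release tags)."""
    result = subprocess.run(
        ["git", "-C", repo, "diff", "--name-status", old_ref, new_ref],
        capture_output=True, text=True, check=True,
    )
    return parse_name_status(result.stdout)


def compare(submitted: set[str], actual: set[str]) -> dict[str, set[str]]:
    """Report discrepancies between a submitted change list and the repo."""
    return {
        "missing": actual - submitted,     # changed in the repo, not listed
        "unexpected": submitted - actual,  # listed, but not changed in the repo
    }


if __name__ == "__main__":
    # Offline demo with canned git output; against a real repository you
    # would call repo_changes() with (hypothetical) tags such as "v1.0", "v1.1".
    sample = "M\tsrc/app.py\nA\tsrc/new.py\nR100\told.py\trenamed.py\n"
    print(compare({"src/app.py", "docs/readme.md"}, parse_name_status(sample)))
```

An empty `missing` set across several sampled releases is the kind of evidence that would support treating an automatically built change list as reliable; a manually written list would need the same check done by hand.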

Automated Configuration Assessments

Security configurations are a major part of ensuring that systems contain properly implemented security controls. Following configuration guidance, such as the CIS Benchmarks, leads to consistent security outcomes. Automated configuration assessment tools, such as the CIS Configuration Assessment Tool (CIS-CAT), can confirm that the guidance is followed in every release.

During the organizational assessment, the assessor will determine whether the RTP follows configuration guidance and uses a reliable assessment tool. If so, the results of the assessment tool will be used during RABET-V iterations to verify certain requirements. If this artifact is missing or unreliable, the product verification activity will have to perform additional testing to verify secure configurations.
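One way to show that configuration guidance is followed for every release is to summarize each scan and flag rules that regressed since the previous release. The sketch below assumes scan results have already been exported as `(rule_id, result)` pairs using XCCDF-style result strings such as `pass`, `fail`, and `notapplicable`; the input format, rule IDs, and function names are hypothetical, and no particular tool's report format (including CIS-CAT's) is modeled.

```python
from __future__ import annotations

from collections import Counter


def summarize(results: list[tuple[str, str]]) -> dict:
    """Summarize (rule_id, result) pairs from one configuration scan."""
    counts = Counter(result for _rule, result in results)
    scored = counts["pass"] + counts["fail"]  # ignore notapplicable, etc.
    return {
        "counts": dict(counts),
        # Share of scored rules that passed; None if nothing was scored.
        "pass_rate": counts["pass"] / scored if scored else None,
        "failed_rules": sorted(rule for rule, result in results if result == "fail"),
    }


def regressions(previous: list[tuple[str, str]],
                current: list[tuple[str, str]]) -> list[str]:
    """Rules that passed in the previous release but fail in this one."""
    passed_before = {rule for rule, result in previous if result == "pass"}
    return sorted(rule for rule, result in current
                  if result == "fail" and rule in passed_before)


if __name__ == "__main__":
    # Hypothetical rule IDs and results for two consecutive releases.
    release_1 = [("1.1", "pass"), ("1.2", "pass"), ("1.3", "notapplicable")]
    release_2 = [("1.1", "pass"), ("1.2", "fail"), ("1.3", "notapplicable")]
    print(summarize(release_2))
    print(regressions(release_1, release_2))
```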

Automated Vulnerability Assessments

Automated vulnerability assessments check system components for known vulnerabilities, primarily by identifying third-party components that are running known vulnerable versions of software. If deemed appropriate by the organizational assessor and the Administrator, the results of RTPs that regularly perform automated vulnerability scans on their product networks and software will be used during the Product Verification activity in lieu of RABET-V reviewers performing new scans. During the organizational assessment, reviewers will investigate the scope, frequency, and tooling of the RTP’s scans to determine whether they provide sufficient coverage and accuracy.
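The core of a known-vulnerability check is comparing a component inventory against advisories that list affected version ranges. A minimal Python sketch follows; the advisory data, the package name `examplelib`, and the purely numeric version scheme are hypothetical, and production scanners rely on curated vulnerability databases and ecosystem-specific version rules.

```python
from __future__ import annotations


def parse_version(version: str, width: int = 4) -> tuple[int, ...]:
    """Parse a purely numeric dotted version, padding so "1.4" == "1.4.0".

    Real ecosystems (pre-releases, epochs, build metadata) need their own rules.
    """
    parts = [int(p) for p in version.split(".")]
    parts += [0] * (width - len(parts))
    return tuple(parts)


# Hypothetical advisory data: package -> list of (introduced, fixed) pairs.
# Versions >= introduced and < fixed are affected.
ADVISORIES = {
    "examplelib": [("1.0.0", "1.4.2"), ("2.0.0", "2.1.0")],
}


def find_vulnerable(inventory: dict[str, str],
                    advisories: dict[str, list[tuple[str, str]]] = ADVISORIES) -> list[str]:
    """Return a finding for each inventoried component in an affected range."""
    findings = []
    for name, version in sorted(inventory.items()):
        current = parse_version(version)
        for introduced, fixed in advisories.get(name, []):
            if parse_version(introduced) <= current < parse_version(fixed):
                findings.append(f"{name} {version} (affected: >={introduced}, <{fixed})")
                break  # one finding per component is enough here
    return findings


if __name__ == "__main__":
    print(find_vulnerable({"examplelib": "1.4.1", "otherlib": "3.0"}))
```

Scope and coverage, the two things the assessor looks at, map directly onto this sketch: a scan is only as good as the completeness of the inventory and the freshness of the advisory data.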

Automated Unit Testing

Automated unit testing is a way to regression test large and complex applications efficiently. It takes significant investment on the part of the RTP to build test suites that are robust and accurate. For RTPs that have invested in this capability, the results of their internal testing can be used to partially offset RABET-V product verification. The organizational assessment will look at the coverage and depth of the current automated testing routines, as well as the RTP’s commitment to maintaining its test suites.

Third Party Security Analysis

RABET-V strongly encourages RTPs to receive regular, in-depth security audits on their systems. For example, there are audits that focus on hosting security and application security. These audits, if performed against a reliable standard and performed recently, can be used in RABET-V in lieu of repeating similar evaluations.

Organizational Maturity Rubric

The organizational assessment measures the maturity of the RTP’s software development processes for security and usability. It produces an organizational maturity score based on the OWASP Software Assurance Maturity Model (SAMM).

Maturity scores are provided for each of the 17 software development areas (15 SAMM plus usability and accessibility). The scores range from zero to three, where three is the best.
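As a rough illustration of how an area can land on the zero-to-three scale, the Python sketch below assumes the scoring convention of the OWASP SAMM v2 toolbox as we understand it: each question is answered with a weight of 0, 0.25, 0.5, or 1; each stream has one question per maturity level, so a stream scores 0 to 3; and an area’s score is the average of its streams. The function names are hypothetical, and the RABET-V toolbox may aggregate differently, including when combining area scores into the overall score.

```python
from __future__ import annotations

from statistics import mean

# Assumed answer weights (SAMM v2 toolbox style): 0, 0.25, 0.5, or 1.
ALLOWED_ANSWERS = {0.0, 0.25, 0.5, 1.0}


def stream_score(answers: list[float]) -> float:
    """Sum one stream's answers for maturity levels 1 to 3 (range 0 to 3)."""
    if len(answers) != 3 or any(a not in ALLOWED_ANSWERS for a in answers):
        raise ValueError("expected three answers, each 0, 0.25, 0.5, or 1")
    return float(sum(answers))


def area_score(streams: list[list[float]]) -> float:
    """Average the stream scores of one area (range 0 to 3)."""
    return mean(stream_score(s) for s in streams)


if __name__ == "__main__":
    # Hypothetical answers for one area with two streams.
    print(area_score([[1, 0.5, 0], [1, 1, 0.25]]))
```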

As with each of the assessment modules, the organizational assessment has a defined baseline that determines whether or not a product will be Verified by the RABET-V process. The baseline is a combination of minimum scores for a subset of questions and an overall maturity score that must be met or exceeded.

Accessibility

Accessibility is often overlooked as a development priority. It may be hard for developers without a disability to conceptualize needing or using accessibility features, but relatable examples are easy to find. For instance, some software developers who suffered repetitive stress injuries turned to speech-to-text aids to continue working in their profession. Beyond this general necessity, adhering to accessibility standards is often a hard requirement for software solutions in many state systems.

| Accessibility Maturity Levels | Quality Criteria | Required Activity |
|---|---|---|
| Level 0 | | |
| Level 1: Automated conformance to accessibility guidelines | Perform automated accessibility validation during development. | Use automated testing tools during development for: |
| Level 2: Testing with accessibility tools | Perform accessibility tests with commercial accessibility software and OS-specific features, including using personas and scenarios. | Use commercial software, OS-specific features, and personas and scenarios for: |
| Level 3: Formal accessibility testing and analysis program | Use research methods and experts to test prototypes with users who have accessibility needs. | Conduct accessibility testing and integrate results for: |
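Level 1 calls for automated accessibility validation during development. A single rule is enough to show the idea: the Python sketch below uses the standard-library `html.parser` module to flag `<img>` elements with no `alt` attribute, one of the text-alternative checks under WCAG success criterion 1.1.1. The class and function names are hypothetical, and dedicated accessibility testing tools check many more rules than this.

```python
from __future__ import annotations

from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Collect <img> elements that have no alt attribute at all.

    An empty alt="" is allowed, since it marks an image as decorative.
    """

    def __init__(self) -> None:
        super().__init__()
        self.problems: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing tags via the default handle_startendtag.
        attributes = dict(attrs)
        if tag == "img" and "alt" not in attributes:
            line, _offset = self.getpos()
            src = attributes.get("src", "?")
            self.problems.append(f"line {line}: <img src={src!r}> has no alt attribute")


def check_html(markup: str) -> list[str]:
    """Return one message per image missing an alt attribute."""
    checker = MissingAltChecker()
    checker.feed(markup)
    checker.close()
    return checker.problems


if __name__ == "__main__":
    page = '<p><img src="logo.png" alt="Logo"><img src="chart.png"></p>\n<img src="spacer.gif" alt="" />'
    for problem in check_html(page):
        print(problem)
```

Run as part of a build, a check like this fails fast on regressions, which is what distinguishes Level 1 from ad hoc manual review.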

Usability

Usability testing and analysis helps bridge the gap between a solution that meets a set of requirements and a solution that meets the needs of the organization, people, and processes. Meeting usability objectives is the distinction between a solution that people want to use (i.e., meets a set of requirements and usability needs) versus one they don’t (i.e., solely meets a set of requirements).

Users will attempt to reduce friction in completing their desired task. A poorly designed user experience will result in users finding workarounds, often circumventing well-intentioned security controls. For a product to achieve the risk mitigation intended by its requirements, it must integrate usability principles with security controls and, thus, an organization’s maturity in implementing usability is critical to its security outcomes.

| Usability Maturity Levels | Quality Criteria | Required Activity |
|---|---|---|
| Level 0 | | |
| Level 1: Formally established feedback loops with customers | Established processes for receiving feedback from customers and incorporating that feedback into the product | Incorporation of feedback into products for: |
| Level 2: Deploy enhanced feedback capabilities | Interview users, accept feedback directly through the product, collect logs and analytics through the product, or use similar approaches; from these, produce reports on findings and plans for incorporating feedback | Use commercial software, OS-specific features, and personas and scenarios for: |
| Level 3: Formal usability testing and analysis program | Conduct formal research on business processes and users’ behaviors, and conduct usability studies with users interacting with a prototype or version of the software solution. | Conduct formal usability testing and integrate results for: |

Organizational Baseline Scoring

RABET-V uses baseline scoring in the organizational assessment, architecture assessment, and product verification to determine whether a product is Verified, Conditionally Verified, or Returned. The organizational baseline is a combination of two elements: a minimum score for each question in a subset deemed critical, and a minimum overall maturity score.

The minimum required overall organizational maturity score to meet the baseline for verification is 1.20.

The tables below list the critical questions and the minimum score required for each. To meet the organizational baseline, the RTP must also score well enough on the remaining questions to reach the minimum overall maturity score.

| Business Function | Question | Baseline Score |
|---|---|---|
| Governance | Do you understand the enterprise-wide risk appetite for your applications? | 0.50 |
| Governance | Do you have and apply a common set of policies and standards throughout your organization? | 1.00 |
| Governance | Do you have a complete picture of your external compliance obligations? | 1.00 |
| Governance | Do you have a standard set of security requirements and verification procedures addressing the organization’s external compliance obligations? | 0.50 |
| Governance | Have you identified a security champion for each development team? | 0.50 |

| Business Function | Question | Baseline Score |
|---|---|---|
| Design | Do you identify and manage architectural design flaws with threat modeling? | 0.50 |
| Design | Do project teams specify security requirements during development? | 0.50 |
| Design | Do teams use security principles during design? | 0.50 |
| Design | Do you evaluate the security quality of important technologies used for development? | 0.50 |
| Design | Do you have a list of recommended technologies for the organization? | 0.50 |
| Design | Do you enforce the use of recommended technologies within the organization? | 0.50 |

| Business Function | Question | Baseline Score |
|---|---|---|
| Implementation | Is your full build process formally described? | 0.50 |
| Implementation | Do you have solid knowledge about dependencies you’re relying on? | 0.50 |
| Implementation | Do you handle third party dependency risk by a formal process? | 0.25 |
| Implementation | Do you use repeatable deployment processes? | 1.00 |
| Implementation | Do you consistently validate the integrity of deployed artifacts? | 0.25 |
| Implementation | Do you limit access to application secrets according to the least privilege principle? | 0.50 |
| Implementation | Do you track all known security defects in accessible locations? | 1.00 |

| Business Function | Question | Baseline Score |
|---|---|---|
| Verification | Do you review the application architecture for mitigations of typical threats on an ad-hoc basis? | 0.25 |
| Verification | Do you test applications for the correct functioning of standard security controls? | 0.50 |
| Verification | Do you consistently write and execute test scripts to verify the functionality of security requirements? | 0.25 |
| Verification | Do you scan applications with automated security testing tools? | 0.50 |
| Verification | Do you customize the automated security tools to your applications and technology stacks? | 0.25 |
| Verification | Do you manually review the security quality of selected high-risk components? | 0.50 |
| Verification | Do you understand the enterprise-wide risk appetite for your applications? | 0.50 |

| Business Function | Question | Baseline Score |
|---|---|---|
| Operations | Do you analyze log data for security incidents periodically? | 0.50 |
| Operations | Do you follow a documented process for incident detection? | 1.00 |
| Operations | Do you respond to detected incidents? | 1.00 |
| Operations | Do you use a repeatable process for incident handling? | 0.50 |
| Operations | Do you have a dedicated incident response team available? | 0.25 |
| Operations | Do you harden configurations for key components of your technology stacks? | 0.50 |
| Operations | Do you have hardening baselines for your components? | 0.50 |
| Operations | Do you identify and patch vulnerable components? | 1.00 |
| Operations | Do you follow an established process for updating components of your technology stacks? | 0.50 |
| Operations | Do you protect and handle information according to protection requirements for data stored and processed on each application? | 1.00 |

| Business Function | Question | Baseline Score |
|---|---|---|
| Human Factors | Do you have a formal feedback loop with your customers? | 1.00 |
| Human Factors | Do you perform automated accessibility validation during development? | 1.00 |
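The two-part baseline test can be expressed as a short check. The Python sketch below copies four of the critical questions from the tables above and applies the 1.20 overall minimum. The function name, the choice to treat an unanswered question as 0, and the decision to pass in the overall score rather than compute it (its aggregation is not specified here) are assumptions. It reports only whether the baseline is met and does not attempt to decide between Verified, Conditionally Verified, and Returned.

```python
from __future__ import annotations

OVERALL_MINIMUM = 1.20  # minimum overall organizational maturity score

# Four of the critical questions and their minimum scores, copied from the
# tables; a complete check would list every critical question.
CRITICAL_MINIMUMS = {
    "Do you use repeatable deployment processes?": 1.00,
    "Do you track all known security defects in accessible locations?": 1.00,
    "Do you handle third party dependency risk by a formal process?": 0.25,
    "Do you perform automated accessibility validation during development?": 1.00,
}


def check_baseline(scores: dict[str, float], overall: float,
                   minimums: dict[str, float] = CRITICAL_MINIMUMS) -> tuple[bool, list[str]]:
    """Return (baseline met?, list of shortfalls)."""
    shortfalls = [
        f"{question}: scored {scores.get(question, 0.0):.2f}, needs {needed:.2f}"
        for question, needed in minimums.items()
        if scores.get(question, 0.0) < needed  # unanswered counts as 0 (assumption)
    ]
    if overall < OVERALL_MINIMUM:
        shortfalls.append(f"overall score {overall:.2f} is below {OVERALL_MINIMUM:.2f}")
    return (not shortfalls, shortfalls)


if __name__ == "__main__":
    scores = dict(CRITICAL_MINIMUMS)  # every critical question exactly at its minimum
    scores["Do you use repeatable deployment processes?"] = 0.50
    met, shortfalls = check_baseline(scores, overall=1.35)
    print(met, shortfalls)
```

Because both conditions must hold, a high overall score cannot compensate for a single critical question that falls short, which is the point of pairing per-question minimums with the overall threshold.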